Disclaimer: This post is aimed at retro-gaming preservation and code-archeology. All product names, trademarks, and registered trademarks are the property of their respective owners.

933 days, this is the amount of time that passed since part 1 of that blog post. I had almost all work done back in 2018, I was just missing one small detail about the palette applied to the rendered frames. Then life happened and I pretty much abandoned not only this blog but all of my side projects. Around the beginning of February 2021, I felt a sudden urge to finish that project and I dug up all of my notes and source codes and started moving forward. The sources, obviously, didn’t meet my quality bar after all these years, but I took the time to modernize them a bit, so it is less of a shame to make them public.

Before I start, I encourage old and new readers to take a look at part 1, as this post is a continuation and it may be easier to follow along being familiar with the prior research.

One more thing that is worth mentioning that I didn’t know in 2018 is that MK1 and MK2 executables have embedded Watcom compiler symbols. I learned about it just recently when I was looking for some recent development with regard to MK reverse engineering efforts:

- https://www.reddit.com/r/REGames/comments/e7tbq9/decompiling_mortal_kombat/

- https://github.com/fonic/wcdctool

I didn’t use the wcdctool as the list of steps to make it work is quite long and I have most of the executable described in IDA anyway. The most important learning I took from wcdctool is the existence of wdump utility (Open Watcom Executable Image Dump Utility) that can dump all available symbols. I used that tool to apply original functions/symbols names in IDA (on top of my own naming), so I may sometimes refer to those original names, provided they are better than the one I came up with.

uGRA file format

I named that format uGRA, which stands for uncompressed GRA in contrast to the cGRA (compressed GRA). The truth is that uGRA still has some form of compression, but the entropy is not too high, especially compared to cGRA. The file format itself is not well defined and it doesn’t exist without matching mortal kombat executable, as all metadata is hardcoded in the exe file. I’ll first start with describing the sprite structure and the method of finding them in the executable. Later I’ll give some details about the compression algorithm.

To properly decode data from uGRA file I need to locate three components:

- GRA file id – this is the index into gra_entries (original name _handleTable) array that I briefly described in part 1. All sprites definitions are correlated with the actual GRA file by that id.

- Sprites descriptors – very simple structure that contains file id, offset in the file, width, height, x, and y.

- Palette(s) – for simplicity I try to locate all possible palettes definitions, since different sprites may use different palettes within one uGRA file. In theory, it could be possible to write a parser that would automatically assign a proper palette to most of the sprites, but I marked it as infeasible, given the limited time I have and only marginal benefits.

Identification of GRA file id

Having a GRA input file there are only 2 pieces of information that can be used to locate a file id: file name and file size.

struct FileEntry {

char *filename;

int filesize;

char flags;

char padding[3];

int unk2_0;

char *buffer;

int unk2_2;

};

FileEntry structure in theory contains both, but the file name is represented as an offset to the actual file name, so using it requires parsing LE file format to get proper memory layout. Using the file size only seems a lot easier, but may generate multiple file ids in case there are multiple files with the same size (there are). I went down the file size road in my implementation, discarding the incorrect file ids is done later during the sprites decoding. The algorithm is not very sophisticated, I basically scan the MK1 executable looking for the 32bit value that represents the GRA file size, then I verify if the value may belong to the FileEntry structure:

The verification process is implemented as follows:

def isValidPartial(self) -> bool:

return (self.flags == 0x12 and self.unk0 == 0 and self.buffer == 0

and self.unk1 == 0)

def isValid(self) -> bool:

return (self.file_name_offset != 0 and self.file_size != 0

and self.isValidPartial())

If the file size is correctly identified as a part of the FileEntry structure I scan back memory to find the beginning of the _handleTable (using the same validation method for each entry and stopping when it fails). File id is the index to the _handleTable.

Locating sprites descriptors

There are two types of sprites descriptors:

struct SpriteEntry

{

__int16 width;

__int16 height;

__int16 x;

__int16 y;

__int32 file_id_offset;

};

struct SpriteEntryNoXY

{

__int16 width;

__int16 height;

__int32 file_id_offset;

};

SpriteEntry is used for the sprites that are part of animation and require x, y values to correctly position a frame. SpriteEntryNoXY is a simplified sprite descriptor for static elements (for example parts of the UI). Looking for potential sprite descriptors in the executable is rather false-positive prone, even with strict criteria. I assume no information about the GRA file id or file size during that phase, just collect everything that may correspond to some sprite and filter it later for the specific GRA file when needed.

Criteria I’m using in the parser:

- 0 < width <= 320 – MK resolution is 320×240

- 0 < height <= 240

- -256 <= x,y <= 256 – I picked 256 based on a few random sprites, it seems to check out.

This yields 28k sprites in 256 different file ids, obviously, most of it is incorrect, but joined with the file id, file size, and pixel decoding it actually works quite well. At least I haven’t noticed any false positives in the final output.

Locating palettes

Palletes are defined as follows:

struct Palette

{

__int16 length;

__int16 colors[];

};

Now, a generic search for anything that resembles a palette generates a lot of noise. Some basic filtering helps, but I’m still not very happy with the outcome. Limiting the search to the data section only would yield better results, but as I mentioned earlier I didn’t want to deal with the LE file format, so it is what it is.

Criteria I’m using in the parser:

- 2 <= length < 256 – Mode 13h is 256 colors

- none of the colors is greater or equal to 0x8000 – as mentioned in part 1, MK is using only 15 bits to define colors.

- number of unique colors is greater or equal to 2/3 of all defined colors – at the beginning, I was enforcing all colors to be unique, but it turns out some pallets have duplicates :( in a lot of cases values 0x0000 and 0xFFFF, but I found some other random colors as well.

There is further filtering applied based on the actual sprite, namely: do not show palettes with fewer colors than the sprite is using.

Image decompression

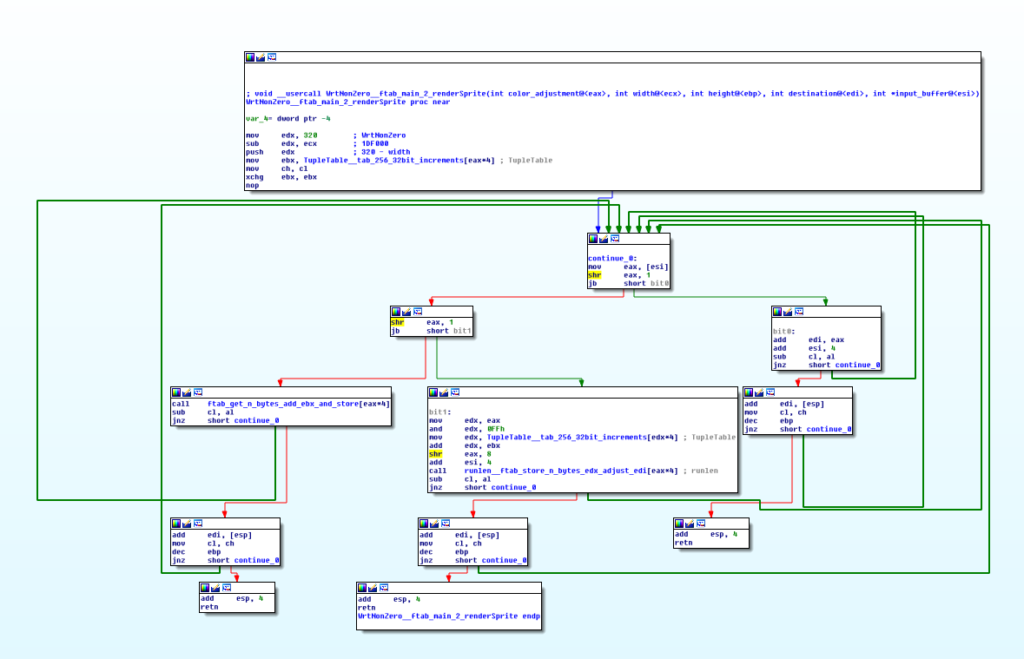

The original name of that function is WrtNonZero, which either tells everything or nothing, depending on who is reading. To me it is nothing, that is why I called it __ftab_main_2_renderSprite, which is at the same level of being wrong as WrtNonZero. The function is a very simple decoder (decompressor?), I’m wary of calling it decompression since the input data is quite sparse:

The algorithm can be described with a few steps:

- Read 32bit value.

- If the first bit is set, move the output pointer by N, where N is the 31 bits left after shifting right the initial 32bit value by 1. Effectively this is the “transparent” pixel.

- If the second bit is set, treat the next 8 bits as a color value and the rest (22 bits) is the number of times, the color should be repeated in the output.

- If none of the above, copy N bytes from the input to the output, where N is 30 bits left after initial double shift right.

- repeat

In most cases the 32bit value is almost “empty”, thus the input is very sparse. There is an additional 32bit input alignment in step 4, which adds even more zero bytes to the input. I may only guess that it was done to optimize sprites rendering, after all the code is 32 bits, so probably reading data in 32-bit chunks makes sense, plus the logic is a lot simpler.

MK engine trivia

IRQSKYE

In the beginning, I mentioned that I had some problems with palettes and this is where I stopped my research in 2018. It turned out that the missing piece was actually very small, the palettes stored in the executable are not 0-based, but 1-based. Color 0 is pre-determined by the game engine and is stored in a global variable called IRQSKYE. I didn’t follow that lead, so I can’t speculate about the exact use case of that color, I’m just happy that setting it to anything makes everything work.

Mode 13h color conversion

The first blog post mentioned that the 16bit colors are in fact 15 bits and it is evenly split to form an RGB value that is later used in Mode 13h. That statement is not correct, even though the images produced by the previous algorithm look ok, there might be some minor color discrepancies. This time I did the job and I found the actual code responsible for setting the Mode 13h palette (TRANSFER_PALETTES):

MULT = 255.0 / 63

@cache

def Convert15to24bitRGB(v: int) -> mktypes.Color:

r = int(round((((v >> 9) & 0x3F) | 1) * MULT))

g = int(round((((v >> 4) & 0x3F) | 1) * MULT))

b = int(round((((v << 1) & 0x3F) | 1) * MULT))

return mktypes.Color(r, g, b)

Palette shift

The code responsible for pixel decoding (WrtNonZero) has one extra argument that I called palette_shift. Value of that argument is added to each decoded pixel, this allows the game to render sprites with different palettes in one scene (as long as the total number of colors doesn’t exceed 256).

Multiple sprite renderers

The game has an array of 128 functions that can be used for actual sprite decoding, most of the entries are just empty, some functions appear in the array multiple times, I did not investigate why it was designed that way, but I did an analysis of some of those renderers/decoders and here are my findings (I’ll use the function names found in symbols):

- WrtNonZero – this is the most important one, described in the “Image decompression” paragraph and reimplemented in my parser.

- WrtConstNonZ – iiuc, this one writes the const color instead of the pixels encoded in the sprite, so it is kind of erase sprite action(?). The output has the shape of the character/sprite.

- WrtConst – writes the single color rectangle using the width and height of the sprite.

- fWrtNonZero – same as WrtNonZero but the output is mirrored (So the characters can move in both directions on the screen).

- fWrtConstNonZ – mirror view of WrtConstNonZ.

- ShadConstNonZ – the algorithm is very similar to WrtConstNonZ, but it skips the rows, effectively making the sprite shorter. It is responsible for creating the shadow of the character.

- fShadConstNonZ – mirror view of ShadConstNonZ.

- ClpWrtNonZero

- ClpWrtConstNonZ

- ClpWrtConst

- fClpWrtNonZero

- fClpWrtConstNonZ

- ClpShadConstNonZ

I did not analyze the Clp prefixed functions, but I guess they might do some clipping on top of the already described functionality?

Crazy optimizations

All of the renderers/decoders are quite compact in terms of code size. On top of that, all basic operations performed by decoders are either unrolled or vectorized.

Vectorization example: there is an array called TupleTable, it contains 256 32bit “increment” values like: 0x00000000, 0x01010101, 0x02020202, 0x03030303 etc. Whenever the decoder needs to store the same pixel value multiple times it loads it from that table and the palette shift is also loaded from there, thus it can adjust 4 pixels at a time.

Loop unrolling example: there are 4 different arrays, each contains 65 function pointers, each function is responsible for doing some operation that otherwise could be a simple loop, but here it is unrolled the number of times corresponding to the index in the array. The functionality of the arrays:

- store N same bytes (value is already vectorized in EDX register)

- copy N bytes and apply palette shift (palette shift is always vectorized in EBX register)

- a mirror version of the store

- a mirror version of the copy

uGRA Viewer

The screenshot at the top of that post is the final version of the uGRA viewer, it allows previewing GRA sprites and exporting static and animated images. Palette lookup is a bit cumbersome, but it should have the correct palette most of the time. It works with both MK1 and MK2 executable/GRA files. Hosted as usual on my GitHub:

Damn big homie you mad smart my g. Iono how you rbos be out here learning this shit.. But mad props for the documenting it

I heard some developer comment in an interview that the format was convoluted as hell… I honestly never thought someone would reverse engineer it. Kudos!

Great work! Have you found any unused graphical contents yet?

You should REALLY take at look at my tool (moved to https://github.com/fonic/wcdatool). It would’ve helped you a lot with this, e.g. telling you where the palettes are located and the correct FileEntry mapping (as it decodes references within the Linear Executable).

I recently uploaded the latest version.

Genius. Now, I learn in 2022 topics which you have learnt around 2007. I’m old HTB fan :-)